Epoll and IO Multiplexing

August 15, 2021 · 834 words · 4 min · OS Linux Network IO

Let’s start with epoll.

epoll is an I/O event notification mechanism in the Linux kernel, designed to replace select and poll. It aims to efficiently handle large numbers of file descriptors and supports the system’s maximum file open limit, providing excellent performance.

Usage

API

epoll has three primary system calls:

/** epoll_create

* Creates an epoll instance and returns a file descriptor for it.

* Needs to be closed afterward, as epfd also consumes the system's fd resources.

* size: Indicates the number of file descriptors to be monitored by epfd.

*/

int epoll_create(int size);

/** epoll_ctl

* Adds or modifies a file descriptor to be monitored by epoll.

* epfd: The epoll file descriptor.

* op: Operation type.

* EPOLL_CTL_ADD: Add a new fd to epfd.

* EPOLL_CTL_MOD: Modify an already registered fd.

* EPOLL_CTL_DEL: Remove an fd from epfd.

* fd: The file descriptor to be monitored.

* event: Specifies the type of event to be monitored.

* EPOLLIN: Indicates the fd is ready for reading (including when the peer socket is closed).

* EPOLLOUT: Indicates the fd is ready for writing.

* EPOLLPRI: Indicates urgent data can be read.

* EPOLLERR: Indicates an error occurred on the fd.

* EPOLLHUP: Indicates the fd has been hung up.

* EPOLLET: Sets epoll to Edge Triggered (ET) mode, as opposed to Level Triggered (LT).

* EPOLLONESHOT: Only listen for the event once. If continued monitoring is required, the socket must be re-added to epfd.

*/

int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event);

/** epoll_wait

* Collects events that have been triggered and returns the number of triggered events.

* epfd: The epoll file descriptor.

* events: Array of epoll events that will be populated with triggered events.

* maxevents: Indicates the size of the events array.

* timeout: Timeout duration; 0 returns immediately, -1 blocks indefinitely, >0 waits for the specified duration.

*/

int epoll_wait(int epfd, struct epoll_event *events, int maxevents, int timeout);

Processing Flow

epoll_create

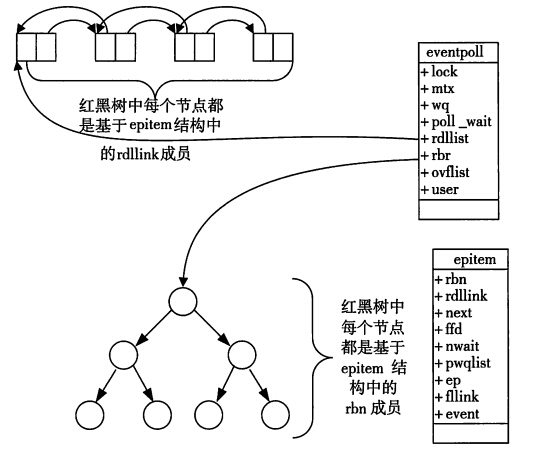

When a process calls epoll_create, the Linux kernel creates an eventpoll structure:

struct eventpoll {

spinlock_t lock;

struct mutex mtx;

wait_queue_head_t wq;

wait_queue_head_t poll_wait;

struct list_head rdllist;

struct rb_root rbr;

struct epitem *ovflist;

struct user_struct *user;

struct file *file;

int visited;

struct list_head visited_list_link;

};

During epoll_create, epoll registers a red-black tree with the kernel, which is used to store the monitored sockets. When epoll_create is called, a file node is created in this red-black tree, and the corresponding socket fd is registered.

Additionally, a doubly linked list is created to store events that are ready.

epoll_ctl

For each monitored event, an epitem structure is created:

struct epitem {

struct rb_node rbn;

struct list_head rdllink;

struct epitem *next;

struct epoll_filefd ffd;

int nwait;

struct list_head pwqlist;

struct eventpoll *ep;

struct list_head fllink;

struct epoll_event event;

};

When epoll_ctl is called, the socket fd is registered to the eventpoll’s red-black tree, and a callback function is registered with the kernel interrupt handler. When an interrupt occurs for the fd, it is added to the ready list.

epoll_wait

When epoll_wait is called, it simply checks if there is data in the list of ready events (epitem). If there is data, it returns immediately; otherwise, it sleeps until either data arrives or the timeout expires.

Epoll Usage Model

for (;;) {

nfds = epoll_wait(epfd, events, 20, 500);

for (i = 0; i < nfds; ++i) {

if (events[i].data.fd == listenfd) {

connfd = accept(listenfd, (sockaddr *)&clientaddr, &clilen);

ev.data.fd = connfd;

ev.events = EPOLLIN | EPOLLET;

epoll_ctl(epfd, EPOLL_CTL_ADD, connfd, &ev);

}

else if (events[i].events & EPOLLIN) {

n = read(sockfd, line, MAXLINE);

ev.data.ptr = md;

ev.events = EPOLLOUT | EPOLLET;

epoll_ctl(epfd, EPOLL_CTL_MOD, sockfd, &ev);

}

else if (events[i].events & EPOLLOUT) {

struct myepoll_data *md = (myepoll_data *)events[i].data.ptr;

sockfd = md->fd;

send(sockfd, md->ptr, strlen((char *)md->ptr), 0);

ev.data.fd = sockfd;

ev.events = EPOLLIN | EPOLLET;

epoll_ctl(epfd, EPOLL_CTL_MOD, sockfd, &ev);

}

else {

// Other processing

}

}

}

Blocking IO, Non-blocking IO, and IO Multiplexing

Blocking IO

Blocking IO means that a thread waits for data to arrive, releasing the CPU until the data is available. When data arrives, the thread is rescheduled to run.

In scenarios with many read/write requests, frequent context switching and thread scheduling can lead to inefficiency.

Non-blocking IO

In non-blocking IO, a user thread makes an IO request, and if data is not yet available, it returns immediately. The thread must keep checking until the data is ready, at which point it can proceed.

Non-blocking IO has a significant drawback: the thread must constantly poll, which can lead to high CPU usage.

IO Multiplexing

Blocking IO occupies resources, and excessive context switching can be inefficient. Non-blocking IO can lead to high CPU utilization due to constant polling.

IO multiplexing manages multiple file descriptors in a single thread, reducing context switching and idle CPU usage. Mechanisms like select, poll, and epoll were developed to implement this concept, with epoll being the most scalable and efficient.