CPU False Sharing

May 2, 2021 · 566 words · 3 min · CPU Cache

The motivation for this post comes from an interview question I was asked: What is CPU false sharing?

CPU Cache

Let’s start by discussing CPU cache.

CPU cache is a type of storage medium introduced to bridge the speed gap between the CPU and main memory. In the pyramid-shaped storage hierarchy, it sits just below the CPU registers. Its capacity is much smaller than that of main memory, but it is far faster, with speeds close to that of the processor itself.

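The effectiveness of caching relies on the principles of temporal locality (data accessed recently is likely to be accessed again soon) and spatial locality (data near recently accessed data is likely to be accessed soon).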

When the processor issues a memory access request, it first checks if the requested data is in the cache. If it is (a cache hit), it directly returns the data without accessing main memory. If it isn’t (a cache miss), it loads the data from main memory into the cache before returning it to the processor.
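To make the hit/miss logic and the two kinds of locality concrete, here is a minimal C sketch of a toy direct-mapped cache. The 8 slots, the 64-byte line size, and every name (`toy_cache`, `toy_cache_access`) are assumptions for illustration; real CPU caches are set-associative and far larger.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical toy model: a direct-mapped cache with 8 slots of 64-byte lines. */
#define LINE_SIZE 64u
#define NUM_SLOTS 8u

typedef struct {
    bool valid[NUM_SLOTS];      /* does the slot hold any line yet? */
    uint64_t block[NUM_SLOTS];  /* which memory block (addr / LINE_SIZE) it holds */
    unsigned hits, misses;
} toy_cache;

void toy_cache_init(toy_cache *c) {
    memset(c, 0, sizeof *c);
}

/* Returns true on a hit. On a miss, "loads" the whole line containing addr. */
bool toy_cache_access(toy_cache *c, uint64_t addr) {
    assert(c != NULL);
    uint64_t block = addr / LINE_SIZE;             /* memory block number */
    unsigned slot = (unsigned)(block % NUM_SLOTS); /* the only slot it may use */
    if (c->valid[slot] && c->block[slot] == block) {
        c->hits++;                                 /* hit: no main-memory access */
        return true;
    }
    c->valid[slot] = true;                         /* miss: fetch from main memory, */
    c->block[slot] = block;                        /* evicting whatever was there */
    c->misses++;
    return false;
}
```

Reading address 0 twice gives a miss followed by a hit (temporal locality); reading address 8 next is also a hit, because it lies in the line the first miss already brought in (spatial locality).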

CPU Cache Architecture

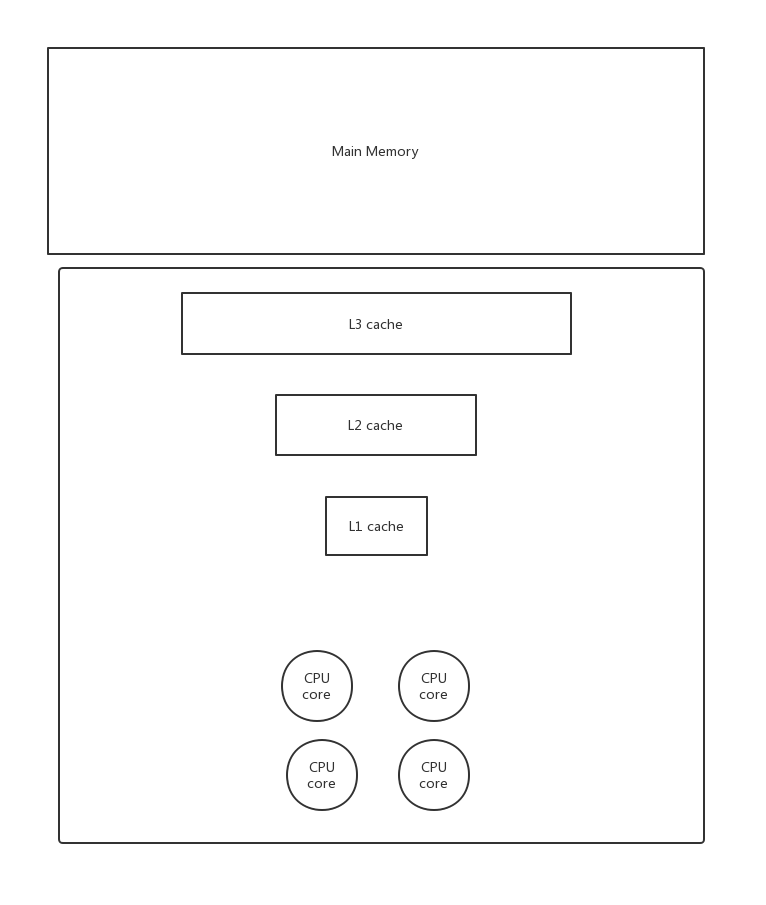

There are usually three levels of cache between the CPU and main memory. The closer the cache is to the CPU, the faster it is but the smaller its capacity. When accessing data, the CPU first checks L1, then L2, and finally L3. If the data isn’t in any of these caches, it must be fetched from main memory.

- L1 sits closest to the CPU core that uses it. L1 and L2 caches are typically private to a single CPU core.

- L3 is typically shared by all CPU cores in a socket.
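The lookup order and the private/shared split can be modeled in a few lines of C. This is a hypothetical sketch (`socket_caches`, `serving_level`, and the two-core layout are made up for illustration) that only records whether each level holds one particular line; real hardware also tracks coherence state and per-level latency.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_CORES 2   /* hypothetical two-core socket */

/* Toy model: each level only records whether it holds one particular line. */
typedef struct {
    bool l1[NUM_CORES];  /* private to each core */
    bool l2[NUM_CORES];  /* private to each core */
    bool l3;             /* shared by every core in the socket */
} socket_caches;

/* Which level serves `core`'s read: 1, 2, 3, or 4 for main memory. */
int serving_level(const socket_caches *s, int core) {
    assert(core >= 0 && core < NUM_CORES);
    if (s->l1[core]) return 1;   /* checked first: smallest and fastest */
    if (s->l2[core]) return 2;
    if (s->l3) return 3;         /* any core can hit here */
    return 4;                    /* missed everywhere: go to main memory */
}
```

Once the line is in the shared L3, core 1 is served from L3 even when only core 0's private L1 also holds a copy.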

CPU Cache Line

Caches operate on cache lines, the smallest unit of data transferred between the cache and main memory. A cache line is typically 64 bytes, so each line holds one aligned 64-byte block of memory.
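Mapping an address to its cache line is simple integer division. The sketch below assumes 64-byte lines, and the helper names (`line_of`, `same_line`, `lines_touched`) are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 64u   /* assumption: 64-byte lines; check the target CPU */

/* Number of the 64-byte memory block (cache line) that holds this address. */
uintptr_t line_of(uintptr_t addr) {
    return addr / CACHE_LINE;
}

/* True if the first bytes of two objects fall in the same cache line. */
bool same_line(const void *p, const void *q) {
    return line_of((uintptr_t)p) == line_of((uintptr_t)q);
}

/* How many lines the contiguous byte range [start, start + len) spans. */
size_t lines_touched(uintptr_t start, size_t len) {
    assert(len > 0);
    return (size_t)(line_of(start + len - 1) - line_of(start) + 1);
}
```

For example, scanning 1,024 `int64_t` values (8,192 bytes) that start on a line boundary touches 8,192 / 64 = 128 lines, so the loop incurs at most 128 line fills rather than one memory access per element.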

Loading a whole line has an advantage: when the data a program needs sits close together, one miss brings in the neighboring data as well, so later accesses to it hit in the cache instead of going back to memory.

However, it can also lead to a problem known as CPU false sharing.

CPU False Sharing

Consider this scenario:

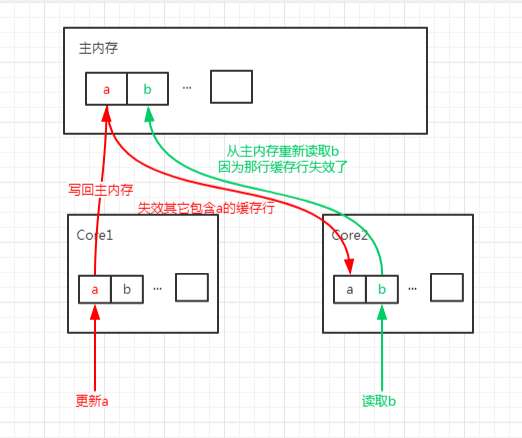

- We have a long variable a, which is a standalone variable rather than part of an array, and another long variable b right next to it in memory. Because both fall in the same cache line, loading a also brings b into the cache for free.

- Now, a thread on one CPU core modifies a, while a thread on a different CPU core reads b.

- When a is modified, the writing core holds the cache line containing both a and b. After the write, the cache coherence protocol invalidates every copy of that line in other cores' caches, since those copies no longer hold the latest value of a.

- When the other core reads b, it finds its copy of the line invalid and must fetch the line again, from another core's cache, a shared cache level, or main memory.
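The layout in this scenario can be checked at compile time. The sketch below assumes 64-byte lines and places a and b in a hypothetical struct `neighbors` whose start is line-aligned, because standalone globals are placed wherever the compiler and linker choose; `offsetof` then confirms that both variables fall in the same line.

```c
#include <assert.h>
#include <stdalign.h>
#include <stdbool.h>
#include <stddef.h>

#define CACHE_LINE 64   /* assumption: 64-byte lines */

/* a and b declared side by side in a line-aligned (hypothetical) struct,
   so their placement relative to line boundaries is predictable. */
struct neighbors {
    alignas(CACHE_LINE) long a;  /* written by a thread on one core */
    long b;                      /* read by a thread on another core */
};

/* True when two byte offsets inside a line-aligned object fall in the same line. */
bool offsets_share_line(size_t x, size_t y) {
    return x / CACHE_LINE == y / CACHE_LINE;
}
```

Since a sits at offset 0 and b right after it, both share the struct's single line, so every write to a invalidates the line that b lives in.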

Because the cache operates at the level of cache lines, invalidating a’s cache line also invalidates b, and vice versa.

This causes a problem:

b and a are completely unrelated, but each time a is updated, the reading core's next access to b is a cache miss and the line has to be fetched again, slowing that thread down.

CPU false sharing: when threads on different cores access independent variables that happen to share a cache line, and at least one of them writes, they unintentionally slow each other down, even though they never touch the same data.
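A small experiment can expose the effect. In the C sketch below (all names are hypothetical; it assumes POSIX threads and 64-byte lines), two threads each increment only their own counter, once with the counters packed into one line and once with each counter on its own line. Neither thread touches the other's variable, so any extra slowdown in the packed case comes from the shared line alone.

```c
#define _POSIX_C_SOURCE 200809L  /* for clock_gettime */
#include <assert.h>
#include <pthread.h>
#include <stdalign.h>
#include <stdint.h>
#include <time.h>

#define CACHE_LINE 64   /* assumption: 64-byte lines */

/* Two counters in one cache line: each write invalidates the other core's copy. */
struct packed_pair { volatile uint64_t a, b; };

/* The same counters, each starting its own cache line. */
struct padded_pair {
    alignas(CACHE_LINE) volatile uint64_t a;
    alignas(CACHE_LINE) volatile uint64_t b;
};

struct job { volatile uint64_t *counter; uint64_t iters; };

/* Thread body: repeatedly writes its own counter and nothing else. */
static void *bump(void *arg) {
    struct job *j = arg;
    for (uint64_t i = 0; i < j->iters; i++)
        (*j->counter)++;   /* volatile keeps the compiler from folding the loop */
    return NULL;
}

/* Runs one thread per counter; returns wall time in seconds, or -1.0 on error. */
double race(volatile uint64_t *a, volatile uint64_t *b, uint64_t iters) {
    assert(a != b);   /* the threads must write different variables */
    struct job ja = { a, iters }, jb = { b, iters };
    pthread_t ta, tb;
    struct timespec t0, t1;
    clock_gettime(CLOCK_MONOTONIC, &t0);
    if (pthread_create(&ta, NULL, bump, &ja) != 0) return -1.0;
    if (pthread_create(&tb, NULL, bump, &jb) != 0) {
        pthread_join(ta, NULL);
        return -1.0;
    }
    pthread_join(ta, NULL);
    pthread_join(tb, NULL);
    clock_gettime(CLOCK_MONOTONIC, &t1);
    return (double)(t1.tv_sec - t0.tv_sec) + (double)(t1.tv_nsec - t0.tv_nsec) / 1e9;
}
```

Compile with optimization and POSIX threads (for example `cc -O2 -pthread`) and compare `race(&p.a, &p.b, n)` for a `struct packed_pair p` with the same call on a `struct padded_pair`. On many multi-core machines the padded run is noticeably faster, but the gap depends on the CPU and on whether the scheduler places the two threads on different cores, so measure rather than assume.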

Avoiding CPU False Sharing

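- Keep variables that different threads write frequently out of the same cache line, for example by inserting padding between them.

- Align such variables to cache-line boundaries at compile time so that each one starts its own line. See data structure alignment.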
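Both fixes can be written in standard C11. This sketch assumes 64-byte lines and uses hypothetical struct names: manual padding pushes b a full line away from a, while `alignas` asks the compiler to start each hot variable on its own line boundary. The same trick applies to an array of per-thread counters.

```c
#include <assert.h>
#include <stdalign.h>
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 64  /* assumption: 64-byte lines; check the target CPU */

/* Fix 1: manual padding. a and b are exactly one line apart, so they can
   never share a line, wherever the struct itself is placed. */
struct padded_manual {
    int64_t a;
    char pad[CACHE_LINE - sizeof(int64_t)];  /* fills the rest of a's line */
    int64_t b;
};

/* Fix 2: let the compiler align each hot variable to a line boundary. */
struct padded_aligned {
    alignas(CACHE_LINE) int64_t a;
    alignas(CACHE_LINE) int64_t b;
};

/* Per-thread counters in an array: each element occupies its own line. */
struct per_thread_counter {
    alignas(CACHE_LINE) int64_t value;
};

_Static_assert(sizeof(struct per_thread_counter) == CACHE_LINE,
               "each counter should occupy exactly one cache line");
```

The cost is memory: each padded counter takes a whole line instead of 8 bytes, so this is worth doing only for data that different threads write frequently.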